Containers

In this section we take a look at container technology, which has become a key way of shipping code and has had a huge impact on how applications are deployed in the cloud.

Containerization:

Containerization, also called container-based virtualization or application containerization, is an operating-system-level virtualization method for deploying and running distributed applications without launching an entire virtual machine for each application. Instead, multiple isolated systems, called containers, run on a single control host and all of them share that host's single kernel.
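Both halves of that definition, a shared kernel and isolated systems, can be observed from inside a Linux container. The minimal Python sketch below assumes a Linux host (`/proc/self/ns` is Linux-specific) and uses a function name of our own. It prints the kernel release, which is the same for every container on the host because they share one kernel, and the IDs of the namespaces the process belongs to, which usually differ between containers (for example `pid`, `net`, and `mnt`) because each container gets its own isolated view of the system.

```python
import os
import platform


def describe_isolation() -> dict:
    """Return this process's kernel release and its Linux namespace IDs."""
    info = {"kernel": platform.release(), "namespaces": {}}
    ns_dir = "/proc/self/ns"  # Linux-only; absent on macOS and Windows
    if os.path.isdir(ns_dir):
        for name in sorted(os.listdir(ns_dir)):
            try:
                # Each entry is a symlink whose target looks like 'pid:[4026531836]'
                info["namespaces"][name] = os.readlink(os.path.join(ns_dir, name))
            except OSError:
                pass  # skip entries this process is not allowed to read
    return info


if __name__ == "__main__":
    info = describe_isolation()
    print("kernel:", info["kernel"])
    for name, ident in info["namespaces"].items():
        print(f"{name:>18} {ident}")
```

Running the script in two containers on the same host should print the same kernel line but, typically, different namespace IDs.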

Containers vs. Virtual Machines:

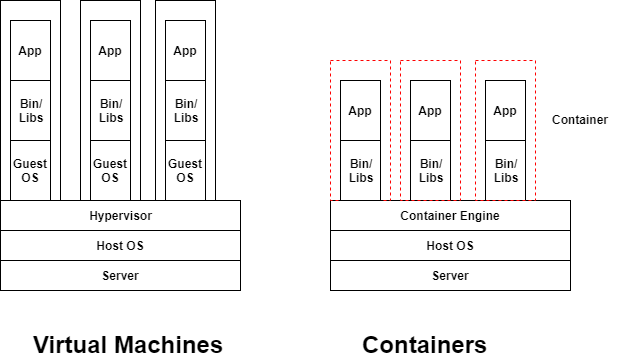

The diagram above illustrates the difference between virtual machines and containers.

Virtual Machines:

Virtual machines run on hypervisor software installed on a physical server that has its own operating system. Each virtual machine has its own full operating system (‘Guest OS’ in the diagram above). This additional OS layer in every virtual machine consumes significant disk space and memory and adds overhead.

Containers:

A container, on the other hand, does not require an OS of its own; it packages only the libraries and binaries the application needs to run and relies on the host's kernel. Libraries common to many containers can also be shared, because container images are built from immutable (read-only) file system layers that several containers can reuse. This makes containers lightweight, while each container still provides an isolated execution environment for its application.
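The sharing of read-only layers can be pictured with a small in-memory model. In this hypothetical Python sketch (the names `make_layer`, `view`, and `Container` are ours), each layer maps file paths to contents, an image is a stack of shared read-only layers, and each container adds its own private writable layer on top. Real runtimes do this with a union or overlay file system, such as Docker's overlay2 storage driver, and also handle file deletions, which this sketch ignores.

```python
from types import MappingProxyType


def make_layer(files):
    """Freeze a {path: contents} dict into a read-only layer."""
    return MappingProxyType(dict(files))


def view(layers):
    """Merge layers bottom to top; a file in an upper layer shadows lower ones."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


class Container:
    def __init__(self, image_layers):
        self.image_layers = image_layers  # shared with other containers, read-only
        self.writable = {}                # private to this container

    def write(self, path, data):
        self.writable[path] = data        # image layers are never modified

    def read(self, path):
        return view([*self.image_layers, self.writable]).get(path)


# Two images reuse one base layer object instead of copying it.
base = make_layer({"/usr/lib/libssl.so": "ssl library", "/bin/sh": "shell"})
image_a = [base, make_layer({"/app/main.py": "print('A')"})]
image_b = [base, make_layer({"/app/main.py": "print('B')"})]
```

A write by one container lands only in its own writable layer, so other containers started from the same image, and the image itself, are unaffected.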

Why do we need Containers?

1. Avoids library conflicts

Using containers helps us eliminate library conflicts. Consider a scenario in which we have to deploy multiple Python applications on one virtual machine. If each of these applications needs a different version of Python, or of the same library, there is a high chance of conflicts between library files, leading to nasty bugs or installation issues. Containers overcome this issue because each container packages its application together with the runtime dependencies and libraries it needs, so each application is isolated and does not interfere with the others.
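The conflict can be made concrete with a toy model. This hypothetical Python sketch treats each environment as a set of `{package: version}` requirements: when all applications share one environment (the single virtual machine), any package requested at two different versions is a conflict; when each application gets its own environment (one container per application), there is nothing to collide with. The application names and versions are invented for illustration.

```python
from collections import defaultdict


def find_conflicts(apps: dict) -> dict:
    """Return packages that the given apps need at different versions.

    apps maps an app name to {package: version}; all of them are assumed
    to be installed into ONE shared environment.
    """
    wanted = defaultdict(set)
    for requirements in apps.values():
        for package, version in requirements.items():
            wanted[package].add(version)
    return {pkg: sorted(vers) for pkg, vers in wanted.items() if len(vers) > 1}


apps = {
    "billing": {"python": "3.8", "requests": "2.25"},
    "reports": {"python": "3.12", "requests": "2.31"},
}

# One shared VM: both apps land in the same environment and collide.
print(find_conflicts(apps))
# One container per app: each environment holds a single app, so no conflicts.
print([find_conflicts({name: reqs}) for name, reqs in apps.items()])
```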

2. Memory Isolation

If multiple applications run on the same virtual machine, they share its memory and process space. One application can consume memory the others need, and a misbehaving or compromised process with sufficient privileges may be able to read or interfere with another application's memory (RAM), leading to data loss, data theft, or corruption. Containers provide a separate runtime environment for each contained application, so applications in other containers cannot see or access it, and memory usage can be limited per container.
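On Linux, container runtimes build this isolation from kernel features: namespaces limit what a process can see, and control groups (cgroups) cap resources such as memory. As a minimal sketch, assuming a Linux host using cgroup v2, a program inside a container can usually read its own memory limit from `/sys/fs/cgroup/memory.max`, which holds either a byte count or the word `max` (no limit). The helper names are hypothetical, and the path differs on cgroup v1 systems.

```python
from pathlib import Path
from typing import Optional

# Assumption: Linux with cgroup v2, where a container usually sees its own
# memory limit in this file.
CGROUP_MEMORY_MAX = Path("/sys/fs/cgroup/memory.max")


def parse_memory_max(text: str) -> Optional[int]:
    """Return the limit in bytes, or None if the cgroup is unlimited ('max')."""
    value = text.strip()
    return None if value == "max" else int(value)


def current_memory_limit() -> Optional[int]:
    """Read the limit; None when it is unlimited or the file is unavailable."""
    try:
        return parse_memory_max(CGROUP_MEMORY_MAX.read_text())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    print("memory limit (bytes):", current_memory_limit())
```

For example, a container started with `docker run --memory 512m ...` would typically report 536870912 bytes.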

3. Ease of deployment across various environments

Deploying an application can involve a series of complex steps: installing the libraries and their dependencies, using the right versions of those libraries, managing configuration, and so on. Containers make these laborious tasks simpler: the steps are scripted once (in Docker's case, in a Dockerfile) to build an image, and that image can then be run in any environment that has a container runtime. This reduces installation and deployment errors and eliminates manual intervention, thereby improving the quality of the overall deliverables.
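Because the image is built from a script, the same steps produce the same result everywhere. The hypothetical Python sketch below models that idea: each scripted step becomes a layer whose ID is a hash of its parent layer's ID and the step text, so re-running an unchanged script gives identical IDs, and changing one step only invalidates that step and the ones after it. Docker's real build cache is more involved (for example, it also checksums files copied into the image), and the step strings are invented.

```python
import hashlib
from typing import List


def build(steps: List[str], base_id: str = "") -> List[str]:
    """Return one layer ID per scripted step (hypothetical model)."""
    layer_ids = []
    parent = base_id
    for step in steps:
        # A layer's ID depends on its parent's ID and on its own step text.
        digest = hashlib.sha256((parent + "\n" + step).encode("utf-8")).hexdigest()
        parent = digest[:12]
        layer_ids.append(parent)
    return layer_ids


v1 = build(["install python 3.12", "install requests 2.31", "copy app/"])
v2 = build(["install python 3.12", "install requests 2.32", "copy app/"])
print(v1)
print(v2)
```

Here `v1` and `v2` share their first layer, while the changed second step and everything after it get new IDs.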

Container Lifecycle:

Let us take a look at a typical container lifecycle. A container goes through the following state transitions:

- Created – When a container is first created from an image, it is in the state ‘Created’.

- Running – When a container is started, it goes to the state ‘Running’. In this state, the application in the container also starts running, using the startup command defined for the container (taken from the image or supplied when the container is launched).

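- Stopped – When a container is stopped, it goes to the state ‘Stopped’. A stopped container can be started again; this restarts the same container, including any changes already written to its file system, rather than creating a new one from the image.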
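The lifecycle above is a small state machine. This hypothetical Python sketch encodes only the three states and transitions described here; real runtimes track more (Docker, for instance, reports a stopped container as `exited` and also supports pausing). In this model, an action that is not allowed from the current state, such as stopping a container that was never started, raises an error.

```python
from enum import Enum


class State(Enum):
    CREATED = "Created"
    RUNNING = "Running"
    STOPPED = "Stopped"


# Allowed transitions: (current state, action) -> next state
TRANSITIONS = {
    (State.CREATED, "start"): State.RUNNING,
    (State.RUNNING, "stop"): State.STOPPED,
    (State.STOPPED, "start"): State.RUNNING,
}


class Container:
    def __init__(self, image: str):
        self.image = image
        self.state = State.CREATED  # created from an image, not yet started

    def _apply(self, action: str) -> None:
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise RuntimeError(f"cannot {action} a container in state {self.state.value}")
        self.state = TRANSITIONS[key]

    def start(self) -> None:
        self._apply("start")

    def stop(self) -> None:
        self._apply("stop")


c = Container("mongo:7.0")
c.start()  # Created -> Running
c.stop()   # Running -> Stopped
c.start()  # Stopped -> Running: the same container object, not a new one
print(c.state.value)
```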

Containers provide network interfaces, so the applications hosted within a container can be reached through an IP address and a port. Container images can be uploaded to a registry; there are public registries, and organizations can also set up their own private registry.

For instance, Docker provides a public registry, known as Docker Hub, where we can find images of most commonly used software.
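Images in a registry are addressed by a reference of the form `registry/repository:tag`, and Docker fills in defaults when parts are omitted: no registry host means Docker Hub (`docker.io`), official single-name images such as `mongo` live under `library/`, and a missing tag means `latest`. The Python function below is a simplified sketch of that normalization, not Docker's actual parser; it ignores digests (`@sha256:...`) and other edge cases.

```python
def parse_image_ref(ref: str) -> dict:
    """Split an image reference into registry, repository and tag (simplified)."""
    registry = "docker.io"
    first, _, rest = ref.partition("/")
    # The first component names a registry host only if it looks like one.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest
    name, tag = ref, "latest"
    # A ':' after the last '/' separates the tag from the repository name.
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name  # official Docker Hub images
    return {"registry": registry, "repository": name, "tag": tag}


for example in ["mongo", "mongo:7.0", "myorg/app:1.2", "localhost:5000/myapp:1.0"]:
    print(example, "->", parse_image_ref(example))
```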

Volumes:

Volumes, in container terminology, are storage drives that can be attached to a container instance. Containers are generally treated as disposable: anything an application writes inside a container lives in the container's own writable layer, which is lost when the container is removed, and data held only in memory is lost as soon as the application stops. This works fine for applications that do not need to persist state, such as many web application servers. However, suppose we want to run a database like MongoDB in a container. In such a scenario, we definitely need a way to persist the data beyond the life of the container. Volumes come in handy here: they let us attach a storage drive to a container, and the application running in the container can write to the volume just as it would write to a local file system.
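The difference between a container's own writable storage and a volume can be sketched with a toy model. In this hypothetical Python example, writes under the mount path go to a `Volume` object that outlives any container, while all other writes go to a per-container writable layer that disappears with the container. `/data/db` is used as the mount path because it is where the official MongoDB image keeps its data; with Docker, the real equivalent would be something like `docker run -v mongo-data:/data/db mongo`.

```python
class Volume:
    """Hypothetical named volume: storage that outlives any container."""

    def __init__(self, name):
        self.name = name
        self.files = {}


class Container:
    def __init__(self, volume=None, mount_path="/data/db"):
        self.volume = volume
        self.mount_path = mount_path
        self.writable_layer = {}  # lost when this container is removed

    def _on_volume(self, path):
        return self.volume is not None and path.startswith(self.mount_path + "/")

    def write(self, path, data):
        target = self.volume.files if self._on_volume(path) else self.writable_layer
        target[path] = data

    def read(self, path):
        source = self.volume.files if self._on_volume(path) else self.writable_layer
        return source.get(path)


data = Volume("mongo-data")
old = Container(data)
old.write("/data/db/users.json", '[{"name": "ada"}]')
old.write("/tmp/cache", "temporary")
del old  # "remove" the container: its writable layer goes with it

new = Container(data)  # a replacement container attaches the same volume
print(new.read("/data/db/users.json"))
print(new.read("/tmp/cache"))
```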

Why are Containers so important in Cloud?

With the advent of the cloud, where we may run several instances of an application, it would be very tedious to set up the application runtime environment on every machine. Also, in cases where we need to switch deployment platforms or cloud providers, it could be extremely painful to perform the environment setup all over again.

Containers come to our rescue here: we can containerize all our applications and deploy them in whichever environment we like. Since the application dependencies are packaged within the container, we do not have to set up environments again and again. Also, if the setup changes, we can update the container image (for example, its base image), rebuild it, and deploy the updated image to all machines.
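Updating every machine then reduces to comparing what each host runs with the new image. This hypothetical Python sketch (host names and tags are invented) lists the hosts still on an old image and groups them into small batches, so that some hosts keep serving while others are updated; it is one possible rollout strategy, not a feature of any particular tool.

```python
def plan_rollout(running: dict, desired_image: str, batch_size: int = 2) -> list:
    """Group hosts that are not yet on desired_image into update batches.

    running maps a host name to the image reference it currently runs.
    """
    outdated = sorted(host for host, image in running.items() if image != desired_image)
    return [outdated[i:i + batch_size] for i in range(0, len(outdated), batch_size)]


running = {
    "web-1": "myapp:1.4",
    "web-2": "myapp:1.5",
    "web-3": "myapp:1.4",
    "web-4": "myapp:1.4",
}
for batch in plan_rollout(running, "myapp:1.5"):
    print("update:", batch)
```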

Reference Implementations of Container Technologies:

Some of the popular container implementations are Docker and rkt (from CoreOS).